The New Distribution Game: Building Products for Agents, Not Users

It’s Not About Attention Anymore. The Next Platform War Is Invocation.

Imagine you’ve nailed your onboarding, user love is high, and growth is steady. Then one day your traffic plummets. Users aren’t vanishing. Their agents are now making the decisions, and you’re not their default choice. Welcome to the invocation era!

For the last two decades, the tech world’s real currency was attention. Whoever controlled the user’s attention owned distribution: Google with search, Apple with app stores, Facebook with the feed. But there’s a new game now, and the rules are being rewritten at breakneck speed.

It’s no longer about what a user sees. It’s about how easily an AI agent can access and use your product to get things done without friction, and at machine speed.

1. The Rise of AI Clients & Callable Products

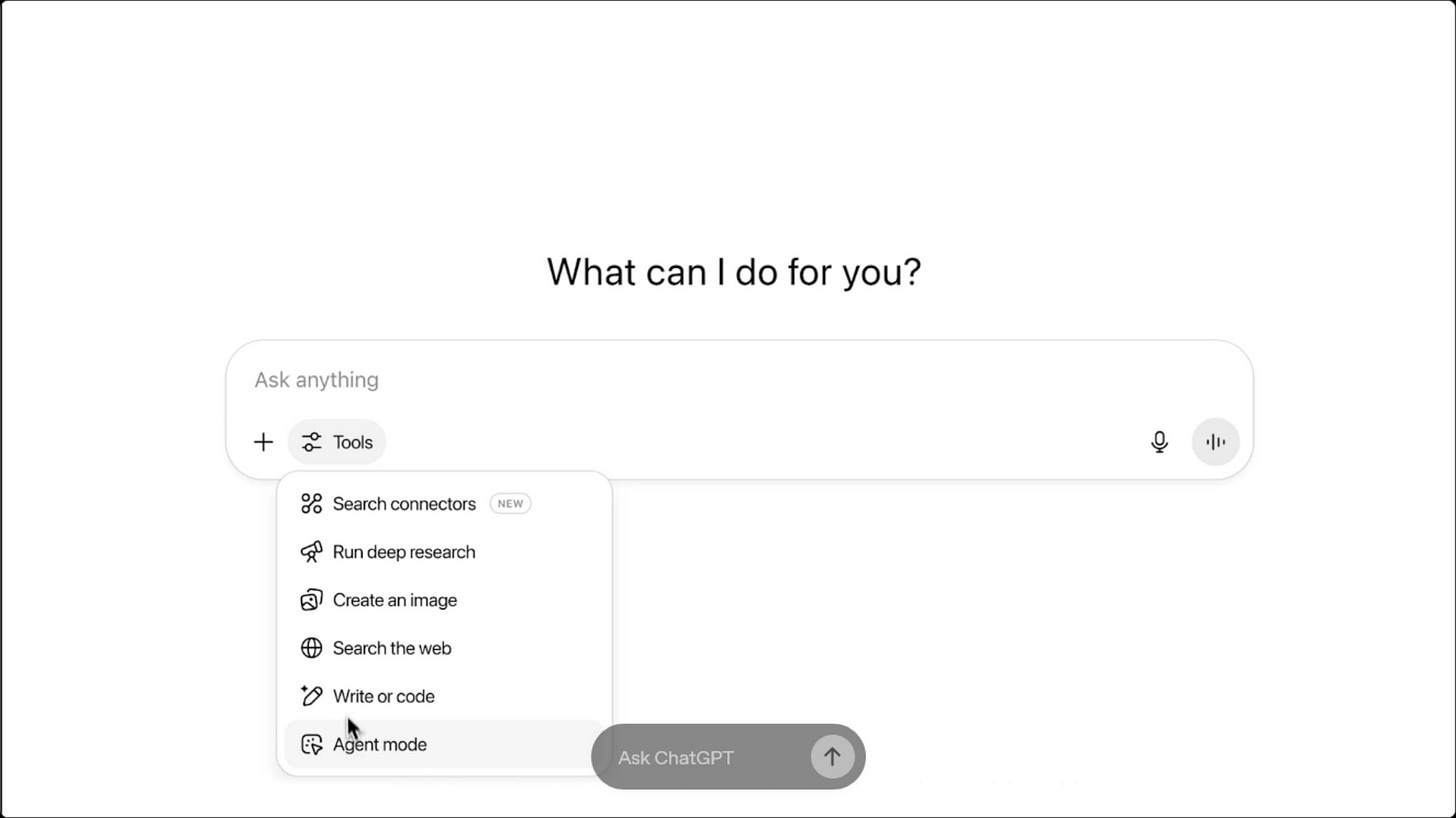

A new interface layer is emerging: the AI client. Products like ChatGPT, Claude, and Cursor aren’t just answering questions. They’re acting as your bespoke operator, taking action across dozens of apps and APIs, stitching together workflows, and making decisions you once made by hand.

In this world, callable products (products that can be directly invoked by models) become the new gatekeepers of distribution. If your service, tool, or workflow isn’t exposed in a way these agents can call upon, you become invisible, no matter how polished your home page or onboarding flow.

2. ChatGPT Agent Mode Signals the Demand Shift — But It’s Not (Yet) Open

The unveiling of ChatGPT’s Agent Mode this week isn’t just an incremental feature. It’s tectonic and it shows us where demand is heading. Agent Mode turns ChatGPT from a conversational assistant into an autonomous, workflow-driving operator. It sequences tasks, interacts with tools, holds context, and executes end-to-end jobs without direct human steering.

Let’s break that down:

Task Chaining: The agent hears “Plan our Q4 offsite” and handles calendaring, location scouting, booking, and payments, possibly across multiple tools.

End-to-End Execution: These aren’t one-shot API calls. They’re multi-step decisions with context, memory, and retries.

User Delegation Norms: As users get comfortable delegating full workflows to agents, the expectations of products shift too: composability, predictability, and programmatic clarity become the new UX.

👉 But here’s the key: ChatGPT’s Agent Mode currently relies on custom logic and integrations, meaning it’s hand-built rather than based on open discovery. This creates a massive opportunity for standardized invocation.

“Users are ready to let agents act on their behalf — so now, agents need a standard way to find, understand, and safely invoke products.”

This shifts the product landscape: How do we design products that agents choose intuitively?

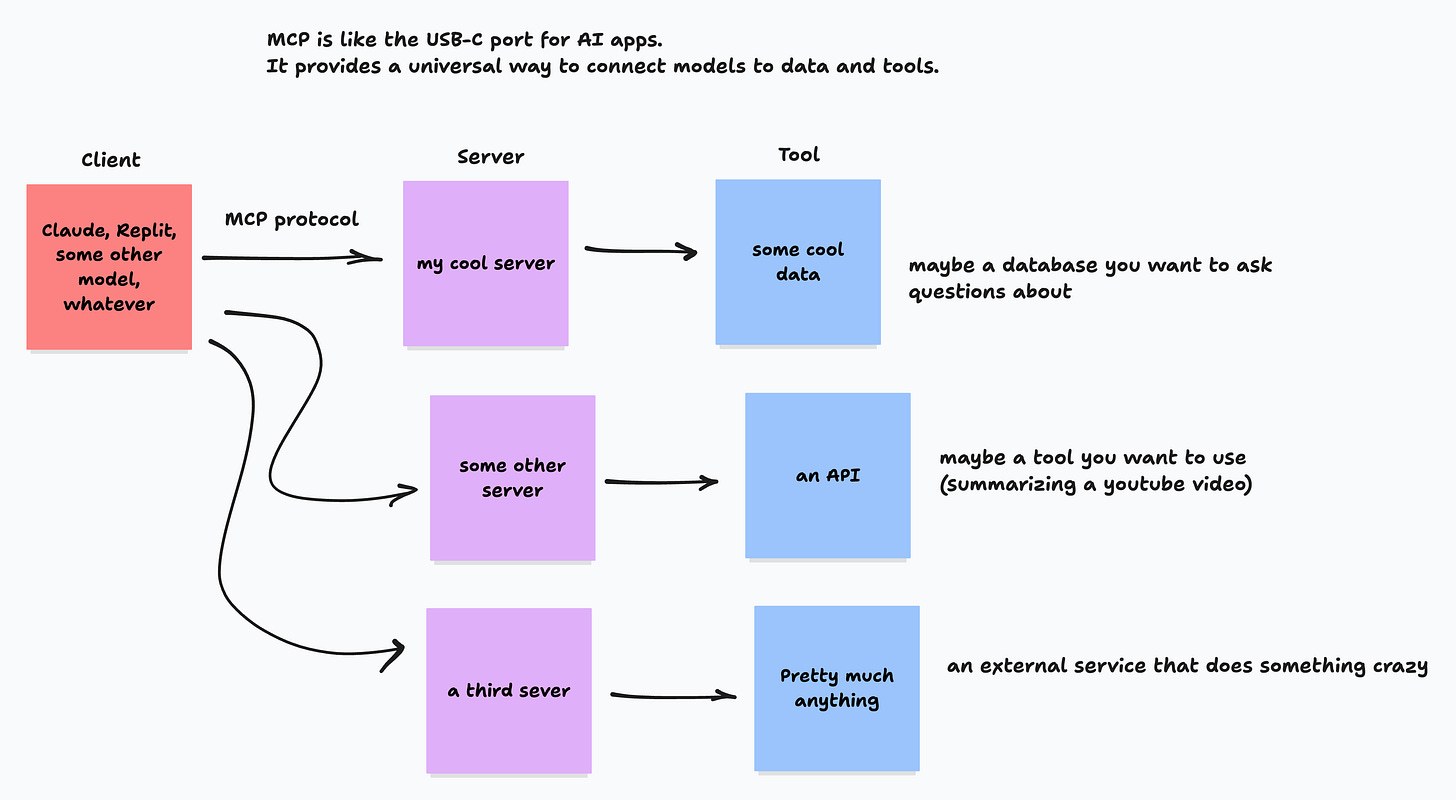

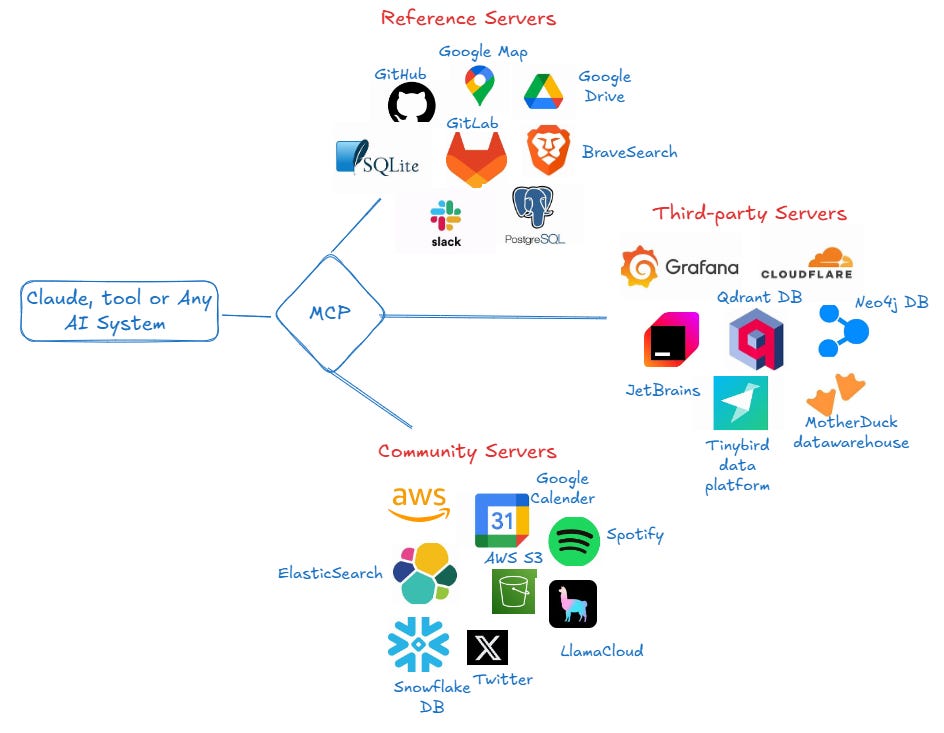

3. The Model Context Protocol: Key to the New Software Stack

Model Context Protocol (MCP) is the emerging lingua franca of agent integration. It is often described as the “USB-C for models and actions”: it lets you expose what your product can do, and how, in a format models understand. MCP streamlines integration, which means:

Agents discovering tools, permissions, and actions with zero guesswork.

No brittle, custom integrations; no ad-hoc hacking.

Whether you’re a SaaS giant or a three-person startup, MCP levels the playing field: if your actions are described, agents can find and use them.
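To make “described” a little more concrete, here is a minimal sketch of a tool descriptor in the shape MCP uses for tools: a name, a human-readable description, and a JSON Schema for the inputs. The `search_flights` tool, its fields, and the `missing_arguments` helper are hypothetical and exist only for illustration; a real server would publish this through an MCP SDK rather than a bare dictionary.

```python
# Hypothetical tool descriptor. The tool name and its inputs are invented
# for this example; only the name/description/inputSchema shape follows MCP.
search_flights_tool = {
    "name": "search_flights",
    "description": "Find available flights between two airports on a given date.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "origin": {"type": "string", "description": "IATA code of the departure airport"},
            "destination": {"type": "string", "description": "IATA code of the arrival airport"},
            "date": {"type": "string", "description": "Departure date, YYYY-MM-DD"},
        },
        "required": ["origin", "destination", "date"],
    },
}


def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return the required input names that the caller did not supply."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


# An agent that only knows the origin can see exactly what else it needs.
print(missing_arguments(search_flights_tool, {"origin": "SFO"}))
```

The point of the sketch is that everything an agent needs in order to call the product correctly lives in the description itself, not in a docs page written for humans.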

4. Discoverability in the Age of MCP: The New Product Battleground

If you build the best travel engine but the agent calls your competitor’s API because of a partnership, schema fit, or faster response time, you never get to the table, even if users want you. With potentially hundreds of MCP-compliant endpoints proliferating, how does your product stand out to agents hunting for the perfect tool to fulfill a user’s intent?

To stay discoverable in a future where hundreds of MCP servers exist, your product must offer:

Clear, semantic descriptions of actions, inputs, and outputs (not just technical fields, but business-relevant intent and usage scenarios).

Rich metadata for permissions, usage restrictions, and context, making it easy for agents to interpret what’s safe, relevant, and applicable.

Fast, reliable endpoints that support low-latency, conversational interactions, since agents will default to tools that respond quickly and handle volume gracefully.

Centralized and unified architecture, so that a single MCP server surfaces broad, cohesive capabilities rather than fragmented services.

5. Metrics, Metrics and Metrics

As Product Managers, we need a new lens on metrics. While traditional dashboards like DAU, retention, and conversion are still important, they don’t reveal how visible or valuable your product truly is to agents. Let’s break it down.

1. Invocation Rate

What it tells you: How often agents (Copilot, Cursor, Perplexity) invoke your product.

Metric: # of Agent Calls per Day / Session

Why it matters: Invocation is the new homepage visit. If agents aren’t calling your product, you’re losing discoverability.

2. Invocation Share

What it tells you: Out of all eligible tools or APIs in a domain (e.g., “summarize PDF”), what percentage of invocations does your product get?

Metric: Your Tool Invocations / Total Invocations in Category

Why it matters: Helps benchmark competitive discoverability. Losing share? Your MCP metadata or capabilities may be under-optimized.
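As a rough sketch, the two ratios above could be computed from an invocation log like this. The log format, the tool names, and both function names are assumptions made for the example. In practice you would probably not see competitors’ calls directly, so the category total would have to come from the agent platform or be estimated.

```python
from collections import Counter

# Hypothetical log for one category (e.g. "summarize PDF"):
# each entry is (day, tool that the agent invoked).
invocation_log = [
    ("day-1", "our_tool"),
    ("day-1", "rival_a"),
    ("day-1", "our_tool"),
    ("day-2", "rival_b"),
    ("day-2", "our_tool"),
    ("day-2", "rival_a"),
]


def invocation_rate(log, tool):
    """Average number of agent calls per day for one tool."""
    days = {day for day, _ in log}
    calls = sum(1 for _, name in log if name == tool)
    return calls / len(days) if days else 0.0


def invocation_share(log, tool):
    """Fraction of all invocations in the category that went to one tool."""
    counts = Counter(name for _, name in log)
    total = sum(counts.values())
    return counts[tool] / total if total else 0.0


print(invocation_rate(invocation_log, "our_tool"))   # 3 calls over 2 days
print(invocation_share(invocation_log, "our_tool"))  # 3 of 6 calls
```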

3. First-call Success Rate

What it tells you: When an agent calls your product, does it work well enough on the first try to meet the user’s intent?

Metric: Successful Outcomes / Total Agent Invocations

Why it matters: Agents optimize for reliability. If you return errors, ambiguous results, or slow responses, you’ll get deprioritized fast.
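One way to read this ratio is to count only the first attempt at each task, so that a success after two retries does not flatter the number. The sketch below takes that reading; the `Invocation` record and its fields are hypothetical, and how you decide that an outcome “succeeded” is left open.

```python
from dataclasses import dataclass


@dataclass
class Invocation:
    task_id: str     # hypothetical ID grouping all attempts at one user task
    attempt: int     # 1 for the first call, 2 or more for retries
    succeeded: bool  # whether the outcome met the user's intent


def first_call_success_rate(invocations):
    """Share of first attempts that succeeded, ignoring retries."""
    first_calls = [inv for inv in invocations if inv.attempt == 1]
    if not first_calls:
        return 0.0
    return sum(inv.succeeded for inv in first_calls) / len(first_calls)


sample = [
    Invocation("t1", 1, True),
    Invocation("t2", 1, False),
    Invocation("t2", 2, True),   # retry succeeded, but does not count
    Invocation("t3", 1, True),
    Invocation("t4", 1, True),
]
print(first_call_success_rate(sample))  # 3 of 4 first attempts succeeded
```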

4. Agent Feedback Signals

What it tells you: Are agents (or their users) giving implicit negative feedback, such as retrying with another tool, rephrasing the request, or manually overriding your invocation?

Examples:

Retry Rate

Re-invocation of a different tool for the same task

Manual override of the tool selection by the user

Why it matters: These signals often happen silently. They're your new churn indicators.
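If you can see the ordered list of tools an agent called for each task, two of these signals fall out with very little code. This is only a sketch: the `task_trails` structure and the tool names are made up, and real telemetry would likely be messier.

```python
# Hypothetical trails: for each task, the tools the agent called, in order.
task_trails = {
    "task-1": ["our_tool"],
    "task-2": ["our_tool", "our_tool"],             # retried us
    "task-3": ["our_tool", "rival_a"],              # fell back to another tool
    "task-4": ["our_tool", "our_tool", "rival_a"],  # retried, then fell back
}


def _tasks_started_with(trails, tool):
    """Trails whose first call went to `tool`."""
    return [trail for trail in trails.values() if trail and trail[0] == tool]


def retry_rate(trails, tool):
    """Share of tasks starting with `tool` where the agent called it again."""
    started = _tasks_started_with(trails, tool)
    if not started:
        return 0.0
    return sum(1 for trail in started if tool in trail[1:]) / len(started)


def fallback_rate(trails, tool):
    """Share of tasks starting with `tool` that later used a different tool."""
    started = _tasks_started_with(trails, tool)
    if not started:
        return 0.0
    fell_back = sum(1 for trail in started if any(name != tool for name in trail[1:]))
    return fell_back / len(started)


print(retry_rate(task_trails, "our_tool"))     # tasks 2 and 4 out of 4
print(fallback_rate(task_trails, "our_tool"))  # tasks 3 and 4 out of 4
```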

5. Metadata Completeness Score

What it tells you: How well-described and interoperable your tool is within MCP clients.

Metric: Percentage of completed fields in MCP schema (capabilities, examples, usage hints, categories)

Why it matters: Well-documented products with clear affordances are more likely to be discovered and invoked.
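A simple way to score this is a checklist: count how many of the fields you care about are present and non-empty. The field names below mirror the list in the metric and are an assumption for this sketch, not official MCP schema keys.

```python
# Checklist of fields to score. These names are illustrative, taken from
# the metric above; they are not defined by the MCP specification.
EXPECTED_FIELDS = ["capabilities", "examples", "usage_hints", "categories"]


def metadata_completeness(metadata: dict) -> float:
    """Percentage of expected fields that are present and non-empty."""
    filled = sum(1 for field in EXPECTED_FIELDS if metadata.get(field))
    return 100.0 * filled / len(EXPECTED_FIELDS)


tool_metadata = {
    "capabilities": ["summarize_pdf"],
    "examples": [],  # present but empty, so it does not count
    "usage_hints": "Best for documents under 100 pages.",
}
print(metadata_completeness(tool_metadata))  # 2 of 4 fields filled
```

A flat count treats every field as equally important. Weighting fields by how much agents actually rely on them would be a natural refinement once you have invocation data.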

6. Latency-to-Invocation Dropoff

What it tells you: How sensitive your invocation rate is to performance and latency.

Metric: Invocation Dropoff (%) vs. Response Time (ms)

Why it matters: Agents prefer fast tools. You may be losing invocations purely on latency, not capability.
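One possible way to get at this curve is to bucket calls by response time and measure what share of calls in each bucket the agent abandoned. In the sketch below, “abandoned” (for example, the agent timed out or switched tools) is an assumed definition of dropoff, and the observations are invented sample data.

```python
from collections import defaultdict


def dropoff_by_latency(observations, bucket_ms=500):
    """Map each latency bucket (start, in ms) to the % of calls abandoned.

    observations: iterable of (response_time_ms, abandoned) pairs.
    """
    totals = defaultdict(int)
    dropped = defaultdict(int)
    for response_ms, abandoned in observations:
        bucket = (response_ms // bucket_ms) * bucket_ms
        totals[bucket] += 1
        dropped[bucket] += int(abandoned)
    return {b: 100.0 * dropped[b] / totals[b] for b in sorted(totals)}


# Hypothetical sample: (response time in ms, whether the agent abandoned the call)
observations = [
    (120, False), (300, False), (450, True),
    (700, False), (900, True),
    (1600, True),
]
for bucket, pct in dropoff_by_latency(observations).items():
    print(f"{bucket}-{bucket + 499} ms: {pct:.0f}% dropoff")
```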

TL;DR: Think Like an API, Compete Like an App Store

6. Challenges & Open Questions

A new stack this powerful comes with risks, riddles, and a few what-ifs:

Will giants like OpenAI, Apple, Microsoft or Google fork MCP for their own platforms? Or will open standards win?

How do we handle safety, abuse, throttling, billing, and identity, now that thousands of actions can be triggered per second by agents, not humans?

Who decides what gets promoted and why? Do we end up with “sponsored invocation” and a pay-to-play ecosystem at the agent layer?

What does governance look like when agents can transact and orchestrate on our behalf at scale?

In the coming decade, your model-native products don’t need to be pretty. They need to be callable, composable, and the invisible backbone for agentic workflows.

If you’re building anything model-forward or callable by default, I want to jam. Let’s trade notes, team up on something weird, or help each other win this shift. Hit me up on LinkedIn!